This article is obsolete. A lot has changed since 2018, and both the documentation and ADF itself now contain a lot of key information, so I recommend that you refer to the official sources like “Delta copy” or “ADF templates”. Link: https://docs.microsoft.com/en-us/azure/data-factory/solution-template-delta-copy-with-control-table

This post explains things that are difficult to find even in English. That’s why I will break my rule and will not write it in my native language! For the Polish version, please use Google Translate :>

Introduction

Loading data using Azure Data Factory v2 is really simple. Just drop Copy activity to your pipeline, choose a source and sink table, configure some properties and that’s it – done with just a few clicks!

But what if you have dozens or hundreds of tables to copy? Are you gonna do it for every object?

Fortunately, you do not have to do this! All you need is dynamic parameters and a few simple tricks 🙂

Also, this will give you the option of creating incremental feeds, so that – at next run – it will transfer only newly added data.

Mappings

Before we start diving into details, let’s demystify some basic ADFv2 mapping principles.

- Copy activity doesn’t need to have defined column mappings at all,

- it can dynamically map them using its own mechanism which retrieves source and destination (sink) metadata,

- if you use PolyBase, it maps columns by their order (1st column from source to 1st column at destination etc.),

- if you do not use PolyBase, it maps them by name, but watch out: the matching is case-sensitive!

- So all you have to do is to just keep the same structure and data types on the destination tables (sink), as they are in a source database.

Bear in mind that if your columns differ between source and destination, you will have to provide custom mappings. This tutorial doesn’t show how to do it, but it is possible: use the “Get metadata” activity to retrieve the column specification from the source, then parse it and pass it as a JSON structure into the mapping dynamic input. You can read about mappings in the official documentation: https://docs.microsoft.com/en-us/azure/data-factory/copy-activity-schema-and-type-mapping

String interpolation – the key to success

My entire solution is based on one cool feature called string interpolation. It is part of the built-in expression engine and lets you inject any value from a JSON object or an expression directly into a string input, without any concatenation functions or operators. It’s fast and easy. Just wrap your expression in @{ ... } . It will always return the result as a string.

Below is a screen from official documentation, that clarifies how this feature works:

Read more about JSON expressions at https://docs.microsoft.com/en-us/azure/data-factory/control-flow-expression-language-functions#expressions
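To make the idea more tangible, here is a minimal Python sketch that mimics what `@{ ... }` does to a string. It is not how ADF is implemented. The `interpolate` helper and the sample row are made up for illustration, and the helper only understands placeholders of the form `@{item().name}`.

```python
import re

# Hypothetical stand-in for one configuration row; in ADF this would be item().
row = {"SRC_tab": "HR.EMP", "WatermarkColumn": "UPDATE_DATE", "id": 7}

def interpolate(template: str, item: dict) -> str:
    """Replace every @{item().name} placeholder with str(item[name])."""
    def repl(match: re.Match) -> str:
        # Whatever the value type is, the result is injected as a string.
        return str(item[match.group(1)])
    return re.sub(r"@\{item\(\)\.(\w+)\}", repl, template)

query = interpolate(
    "SELECT MAX(@{item().WatermarkColumn}) AS maxd FROM @{item().SRC_tab}", row
)
print(query)
```

Note that the numeric `id` also comes out as text, which matches the rule that interpolation always returns a string.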

So what are we going to do? :>

Good question 😉

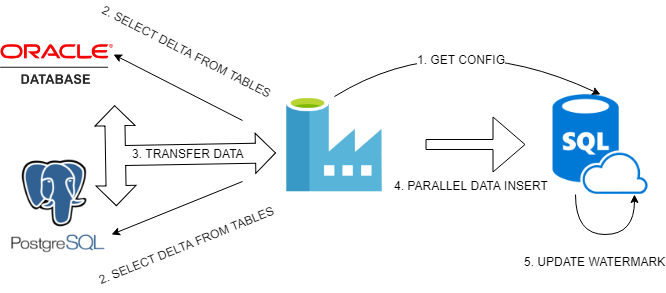

In my example, I will show you how to transfer data incrementally from Oracle and PostgreSQL tables into Azure SQL Database.

All of this using configuration stored in a table, which in short, keeps information about Copy activity settings needed to achieve our goal 🙂

Adding new definitions into config will also automatically enable transfer for them, without any need to modify Azure Data Factory pipelines.

So you can transfer as many tables as you want, in one pipeline, at once. Triggering with one click 🙂

Every process needs a diagram :>

Basically, we will do:

- Get the configuration from our config table inside Azure SQL Database using a Lookup activity, then pass it to Filter activities to split the configs for Oracle and PostgreSQL.

- In a ForEach activity created for each database type, we will create simple logic that retrieves the maximum update date from every table.

- Then we will dynamically prepare expressions for the SOURCE and SINK properties of the Copy activity. MAX UPDATEDATE, retrieved above, and the previous WATERMARK DATE, retrieved from the config, set our boundaries in the WHERE clause. Every detail, like the table name or table columns, is passed into the query using string interpolation, directly from a JSON expression. The sink destination is parametrized as well.

- Now Azure Data Factory can execute the queries evaluated dynamically from JSON expressions. It runs them in parallel to speed up the data transfer.

- Every successfully transferred portion of incremental data for a given table has to be marked as done. We do this by saving MAX UPDATEDATE in the configuration, so that the next incremental load knows what to take and what to skip. We will use a Stored procedure activity here.
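The steps above can be rehearsed outside ADF. The following Python sketch uses an in-memory SQLite database to play all roles at once (source, staging and config). Table names, columns and data are invented for the demo, the loop is sequential rather than parallel, and SQLite’s DELETE stands in for TRUNCATE, so treat it as a model of the logic and not of the pipeline itself.

```python
import sqlite3

con = sqlite3.connect(":memory:")
cur = con.cursor()
# Hypothetical source table, staging table and a one-row config.
cur.executescript("""
CREATE TABLE src (id INTEGER, name TEXT, update_date TEXT);
CREATE TABLE stg (id INTEGER, name TEXT, update_date TEXT);
CREATE TABLE cfg (id INTEGER, src_tab TEXT, dst_tab TEXT, cols TEXT,
                  watermark_column TEXT, watermark_value TEXT);
INSERT INTO src VALUES (1, 'a', '2018-01-01 10:00:00'),
                       (2, 'b', '2018-01-02 10:00:00');
INSERT INTO cfg VALUES (1, 'src', 'stg', 'id, name, update_date',
                        'update_date', '1900-01-01 00:00:00');
""")

def load_delta(cur) -> int:
    """One run: for every config row copy the rows in (watermark, max]."""
    copied = 0
    config_rows = cur.execute("SELECT * FROM cfg").fetchall()       # GET CFG
    for cfg_id, src, dst, cols, wm_col, wm_val in config_rows:      # FOR EACH
        # GET MAX: right boundary of the delta slice.
        max_val = cur.execute(f"SELECT MAX({wm_col}) FROM {src}").fetchone()[0]
        # Pre-copy script: empty the staging table.
        cur.execute(f"DELETE FROM {dst}")
        # COPY: only rows newer than the stored watermark.
        cur.execute(
            f"INSERT INTO {dst} ({cols}) SELECT {cols} FROM {src} "
            f"WHERE {wm_col} > ? AND {wm_col} <= ?",
            (wm_val, max_val),
        )
        copied += cur.execute(f"SELECT COUNT(*) FROM {dst}").fetchone()[0]
        # UPDATE WATERMARK: remember where this load ended.
        cur.execute("UPDATE cfg SET watermark_value = ? WHERE id = ?",
                    (max_val, cfg_id))
    return copied

first = load_delta(cur)                      # initial load
cur.execute("INSERT INTO src VALUES (3, 'c', '2018-01-03 10:00:00')")
second = load_delta(cur)                     # only the new row
third = load_delta(cur)                      # nothing changed
print(first, second, third)
```

The sketch does not handle an empty source table (MAX would return NULL); a real pipeline would need to decide what to do in that case.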

About sources

I will use PostgreSQL 10 and Oracle 11 XE installed on my Ubuntu 18.04 inside VirtualBox machine.

In Oracle, tables and data were generated from the EMP/DEPT samples delivered with the XE version.

In PostgreSQL – from dvd rental sample database: http://www.postgresqltutorial.com/postgresql-sample-database/

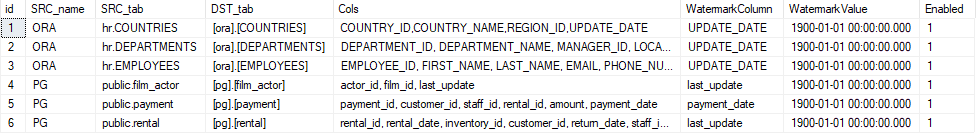

I simply chose three largest tables from each database. You can find them in a configuration shown below this section.

Every database is accessible from my Self-hosted Integration Runtime. I will show an example how to add the server to Linked Services, but skip configuring Integration Runtime. You can read about creating self-hosted IR here: https://docs.microsoft.com/en-us/azure/data-factory/create-self-hosted-integration-runtime.

About configuration

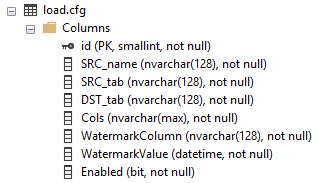

In my Azure SQL Database I have created a simple configuration table:

Id is just an identity value, and SRC_name is the type of source server (ORA or PG).

The SRC_tab and DST_tab columns map source objects to destination objects. Cols defines the selected columns, while WatermarkColumn and WatermarkValue store the incremental metadata.

And finally, Enabled enables a particular configuration (table data import).

This is how it looks with initial configuration:

Create script:

    SET ANSI_NULLS ON
    GO

    SET QUOTED_IDENTIFIER ON
    GO

    CREATE TABLE [load].[cfg](
        [id] [SMALLINT] IDENTITY(1,1) NOT NULL,
        [SRC_name] [NVARCHAR](128) NOT NULL,
        [SRC_tab] [NVARCHAR](128) NOT NULL,
        [DST_tab] [NVARCHAR](128) NOT NULL,
        [Cols] [NVARCHAR](MAX) NOT NULL,
        [WatermarkColumn] [NVARCHAR](128) NOT NULL,
        [WatermarkValue] [DATETIME] NOT NULL,
        [Enabled] [BIT] NOT NULL,
     CONSTRAINT [PK_load] PRIMARY KEY CLUSTERED
    (
        [id] ASC
    )WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF) ON [PRIMARY]
    ) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]
    GO

    ALTER TABLE [load].[cfg] ADD CONSTRAINT [DF__cfg__WatermarkVa__4F7CD00D] DEFAULT ('1900-01-01') FOR [WatermarkValue]
    GO

EDIT 19.10.2018

Microsoft announced that you can now parametrize linked services (connections) as well!

Let’s get started (finally :P)

Preparations!

Go to your Azure Data Factory portal @ https://adf.azure.com/

Select Author button with pencil icon:

Creating server connections (Linked Services)

We can’t do anything without defining Linked Services, which are just connections to your servers (on-prem and cloud).

- Open the list of Linked Services and start adding a new one.

- Find your database type, select it and continue.

- Provide all the required details, such as server IP/host, port, SID (Oracle needs this), login and password.

- You can test the connection to check that everything is OK. Click Finish to save your connection definition.

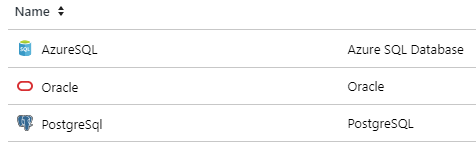

I have created three connections. Here are their names and server types:

Creating datasets

Creating linked services just tells ADF what the connection settings are (like connection strings).

Datasets, on the other hand, point directly to database objects.

BUT they can be parametrized, so you can create just ONE dataset and pass different parameters to it to get data from multiple tables within the same source database 🙂

Source datasets

Source datasets don’t need any parameters. We will later use built-in query parametrization to pass object names.

- Go to the datasets list, click + and choose to add a new dataset.

- Choose your dataset type, for example Oracle.

- Rename it as you like. We will use the name “ORA”.

- Set the proper Linked service option, pointing at the linked service created for the Oracle database.

- And that’s it! No need to set anything else. Just repeat these steps for every source database that you have.

In my example, I’ve created two source datasets, ORA and PG.

As you can see, we also need to create a third dataset. It will work as a source too, BUT also as a parametrizable sink (destination), so creating it is a little different from the others.

Sink dataset

Sinking data needs one more extra parameter, which will store destination table name.

- Create dataset just like in the previous example, choose your destination type. In my case, it will be Azure SQL Database.

- Go to

, declare one String parameter called “TableName”. Set the value to anything you like. It’s just dummy value, ADF just doesn’t like empty parameters, so we have to set a default value.

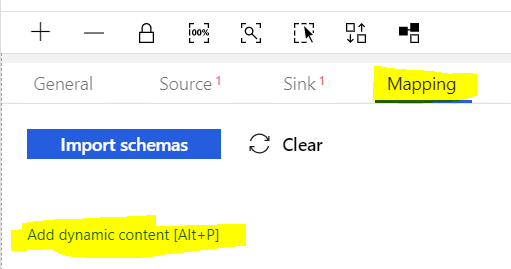

, declare one String parameter called “TableName”. Set the value to anything you like. It’s just dummy value, ADF just doesn’t like empty parameters, so we have to set a default value. - Now, go to

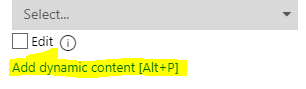

, set Table as dynamic content. This will be tricky :). Just click “Select…”, don’t choose any value, just click somewhere in empty space. The magic option “Add dynamic content” now appears! You have to click it or hit alt+p.

, set Table as dynamic content. This will be tricky :). Just click “Select…”, don’t choose any value, just click somewhere in empty space. The magic option “Add dynamic content” now appears! You have to click it or hit alt+p.

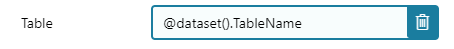

- The “Add Dynamic Content” window is now visible. Type: “@dataset().TableName” or just click “TableName” in the “Parameters” section below “Functions”.

- The table name is now parameterized and looks like this:

Parametrizable PIPELINE with dynamic data loading.

Ok, our connections are defined. Now it’s time to copy data :>

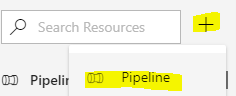

Creating pipeline

- Go to your ADF, click the PLUS symbol near the search box on the left and choose “Pipeline“:

- Rename it. I will use “LOAD DELTA“.

- Go to Parameters and create a new String parameter called ConfigTable. Set its value to our configuration table name: load.cfg . This simply parametrizes your configuration source, so that in the future you can load a completely different set of sources by changing only one parameter :>

- In case you missed it, SAVE your work by clicking “Save All” if you’re using GIT or “Publish All” if not ;]

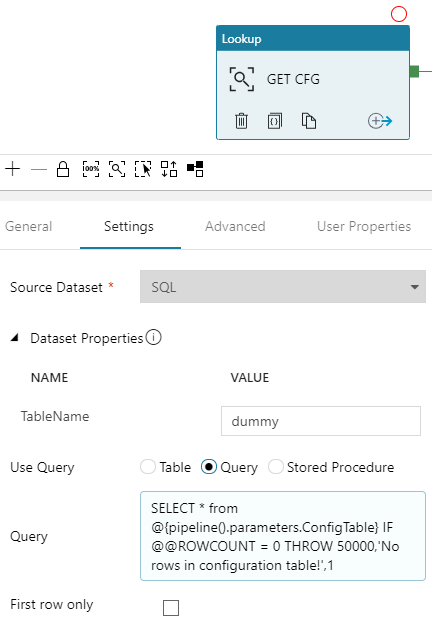

Creating Lookup – GET CFG

First, we have to get configuration. We will use Lookup activity to retrieve it from the database.

- Drag and drop a Lookup activity into your pipeline.

- Rename it. This is important, because we will use this name later in our solution. I will use the value “GET CFG“.

- In “Settings”, choose the Azure SQL Database dataset as the source dataset.

- Don’t worry that TableName is set to a dummy value :> Set “Use Query” to “Query“, click “Add dynamic content” and type:

      SELECT * from @{pipeline().parameters.ConfigTable}
      IF @@ROWCOUNT = 0 THROW 50000,'No rows in configuration table!',1

- Unmark “First row only“; we need all rows, not just the first. All should look like this:

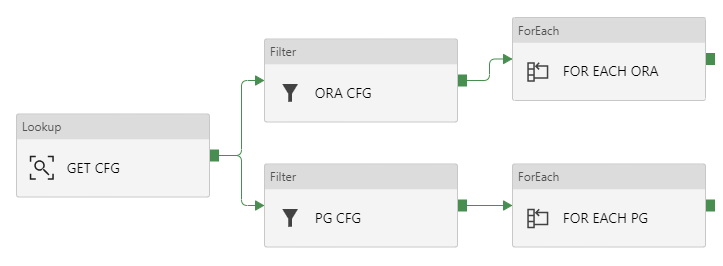

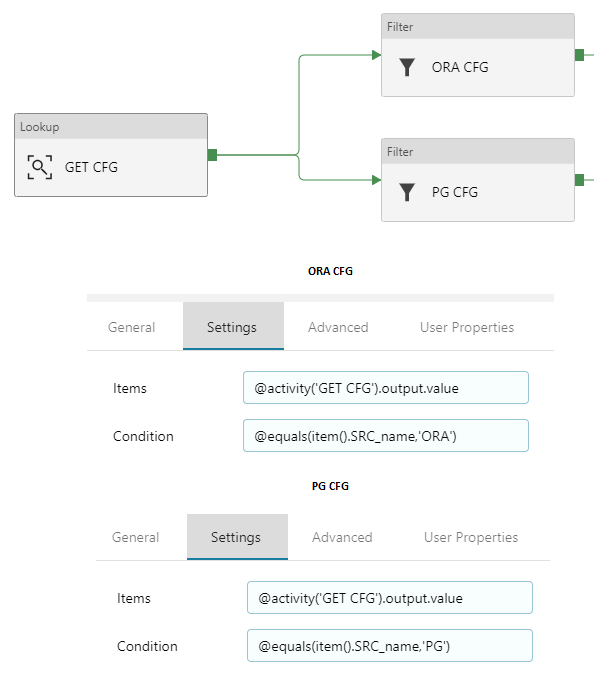

Creating Filters – ORA CFG & PG CFG

Now we have to split configs for oracle and PostgreSQL. We will use Filter activity on rows retrieved in “GET CFG” lookup.

- Drag and drop a Filter activity twice.

- Rename the first block to “ORA CFG“ and the second to “PG CFG“.

- Now go to “ORA CFG“, then “Settings“.

- In Items, click Add dynamic content and type: @activity('GET CFG').output.value . As you can probably guess, this points directly to the GET CFG output rows 🙂

- In Condition, click Add dynamic content and type: @equals(item().SRC_name,'ORA') . We have to match the rows with Oracle settings. We know that the config table has a column called “SRC_name“, so we can use it to filter out all rows except those with the value ‘ORA’ 🙂 .

- Do the same with the filter activity “PG CFG“. Of course, change the value in the condition to ‘PG’.

It should look like this:

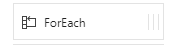
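If it helps to think of it in ordinary code, the two Filter activities do roughly what the list comprehension below does. This is a sketch only: the shape of the Lookup output (a list of rows under `value`) follows the expressions used above, while the sample rows and the `filter_rows` helper are assumptions made up for the example.

```python
# Hypothetical Lookup output: all config rows live under the "value" key.
get_cfg_output = {
    "value": [
        {"id": 1, "SRC_name": "ORA", "SRC_tab": "HR.EMP"},
        {"id": 2, "SRC_name": "PG", "SRC_tab": "public.rental"},
        {"id": 3, "SRC_name": "ORA", "SRC_tab": "HR.DEPT"},
    ]
}

def filter_rows(items: list, src_name: str) -> list:
    """Keep only rows whose SRC_name equals src_name, like @equals(item().SRC_name, ...)."""
    return [item for item in items if item["SRC_name"] == src_name]

ora_cfg = filter_rows(get_cfg_output["value"], "ORA")   # input for FOR EACH ORA
pg_cfg = filter_rows(get_cfg_output["value"], "PG")     # input for FOR EACH PG
print(len(ora_cfg), len(pg_cfg))
```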

Creating ForEach – FOR EACH ORA & FOR EACH PG

Now it’s time to iterate over each row filtered in separate containers (ORA CFG and PG CFG).

- Drag and drop two ForEach blocks and rename them “FOR EACH ORA” and “FOR EACH PG“. Connect each to its filter activity, just like in this example:

- Click “FOR EACH ORA“, go to “Settings“, and in Items click Add dynamic content and type: @activity('ORA CFG').output.value . This tells ForEach to iterate over the results returned by “ORA CFG”, which are stored in a JSON array.

- Do the same in FOR EACH PG. Type: @activity('PG CFG').output.value

- Now you can edit Activities and add just a “WAIT” activity to debug your pipeline. I will skip this part. Just remember to delete the WAIT block at the end of your tests.

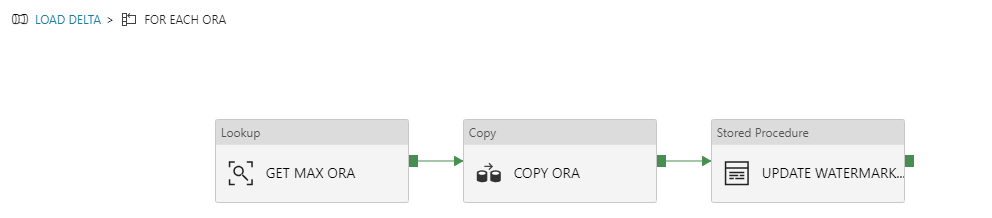

Inside ForEach – GET MAX ORA -> COPY ORA -> UPDATE WATERMARK ORA

Place these blocks into FOR EACH ORA. Just go there, click “Activities” and edit them.

Inside the loop, the current configuration row is available as item(), and every column in that row can be reached using @item().ColumnName .

Remember that you can wrap every expression in @{ } to use it with string interpolation. Then you can concatenate it with other strings and expressions, just like this: Value of the parameter WatermarkColumn is: @{item().WatermarkColumn}

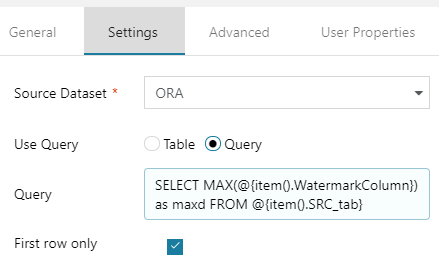

GET MAX ORA

- Go to “GET MAX ORA“, then Settings

- Choose your source dataset “ORA“, Use Query: “Query” and click Add dynamic content

- Type SELECT MAX(@{item().WatermarkColumn}) as maxd FROM @{item().SRC_tab} . This gets the maximum date in your watermark column. We will use it as the RIGHT BOUNDARY of the delta slice.

- Check if First row only is turned on.

It should look like this:

COPY ORA

Now the most important part :> Copy activity with a lot of parametrized things… So pay attention, it’s not so hard to understand but every detail matters.

Source

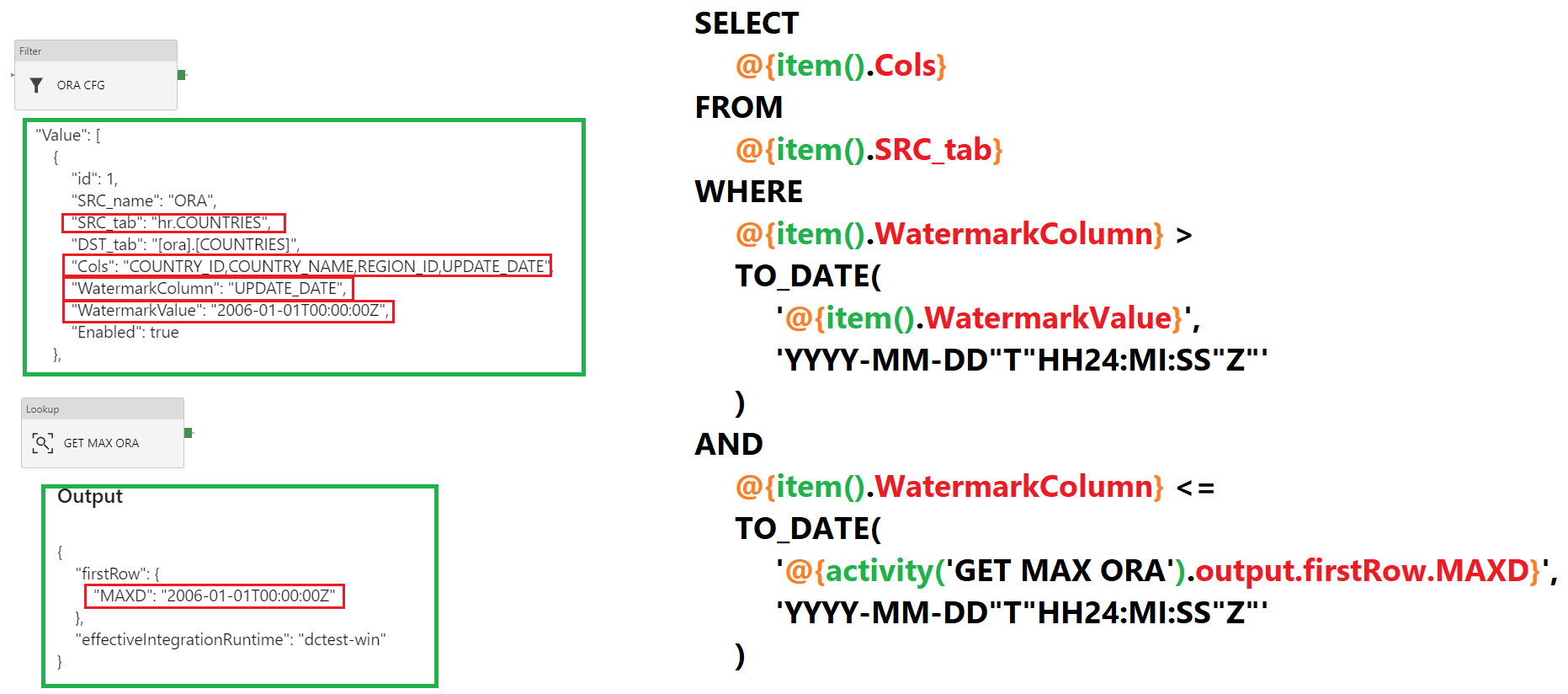

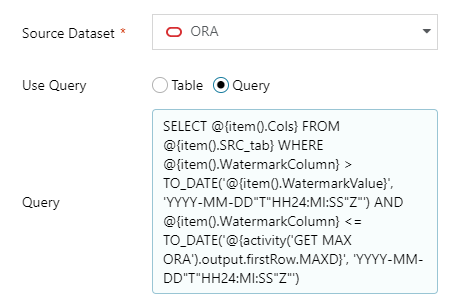

- In source settings, choose Source Dataset to ORA, in Use query select Query.

- Below Query input, click Add dynamic content and paste this:

      SELECT
      @{item().Cols} FROM @{item().SRC_tab}
      WHERE
      @{item().WatermarkColumn} >
      TO_DATE('@{item().WatermarkValue}', 'YYYY-MM-DD"T"HH24:MI:SS"Z"')
      AND
      @{item().WatermarkColumn} <=
      TO_DATE('@{activity('GET MAX ORA').output.firstRow.MAXD}', 'YYYY-MM-DD"T"HH24:MI:SS"Z"')

Now, this needs some explanation 🙂

- ORA CFG output has all columns and their values from our config.

- We will use SRC_tab as the table name, Cols as the columns for the SELECT query, WatermarkColumn as the LastChange DateTime column name and WatermarkValue for the LEFT BOUNDARY (greater than, >).

- GET MAX ORA output stores the date of the last updated row in the source table. This is why we use it as the RIGHT BOUNDARY (less than or equal, <=).

- And the tricky thing: ORACLE doesn’t support implicit conversion from a string with an ISO 8601 date, so we need to convert it explicitly with the TO_DATE function.

So the source is a query from ORA dataset:

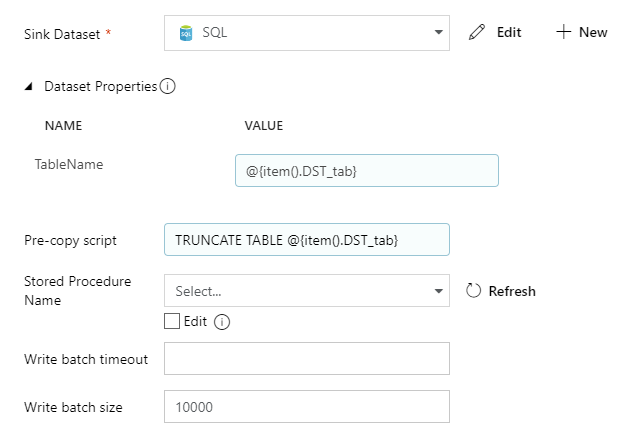
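To see what kind of statement reaches Oracle after all placeholders are resolved, here is a small Python sketch that assembles the same query from one config row. The row values, the maximum date and the `build_oracle_delta_query` helper are all hypothetical; only the query layout and the TO_DATE format mask come from the expression above.

```python
def build_oracle_delta_query(item: dict, max_value: str) -> str:
    """Assemble the delta query for one config row, mirroring the ADF expression."""
    # Oracle format mask for ISO 8601 strings; T and Z are quoted literals.
    fmt = 'YYYY-MM-DD"T"HH24:MI:SS"Z"'
    return (
        f"SELECT {item['Cols']} FROM {item['SRC_tab']} "
        f"WHERE {item['WatermarkColumn']} > "
        f"TO_DATE('{item['WatermarkValue']}', '{fmt}') "
        f"AND {item['WatermarkColumn']} <= "
        f"TO_DATE('{max_value}', '{fmt}')"
    )

# Hypothetical config row and GET MAX result, both as ISO 8601 strings.
item = {
    "Cols": "EMPNO, ENAME, UPDATE_DATE",
    "SRC_tab": "HR.EMP",
    "WatermarkColumn": "UPDATE_DATE",
    "WatermarkValue": "1900-01-01T00:00:00Z",
}
sql = build_oracle_delta_query(item, "2018-10-19T12:30:00Z")
print(sql)
```

Printing the resolved statement like this is also a handy way to debug: if the query fails in ADF, compare it with what you would run by hand against the source database.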

Sink

Sink is our destination. Here we will set parametrized table name and truncate query.

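- Select the parametrized Azure SQL Database dataset as the sink dataset.

- Parametrize TableName as dynamic content with the value: @{item().DST_tab}

- Do the same with Pre-copy script and put there: TRUNCATE TABLE @{item().DST_tab}

Note that the pre-copy script runs before loading starts, so with TRUNCATE the destination works as a staging table that holds only the latest delta. If you want to append rows instead, leave this field empty or use a script that fits your case (for example partition switching or partition truncating).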

It should look like this:

Mappings and Settings

All other things should just be set to defaults. You don’t have to parametrize mappings if you just copy data from and to tables that have the same structure.

Of course, you can dynamically create them if you want, but it is a good practice to transfer data 1:1 – both structure and values from source to staging.

UPDATE WATERMARK ORA

Now we have to confirm, that load has finished and then update previous watermark value with the new one.

We will use a stored procedure. The code is simple:

    CREATE PROC [load].[usp_UpdateWatermark]
        @id SMALLINT,
        @NewWatermark DATETIME
    AS

    SET NOCOUNT ON;

    UPDATE load.cfg
    SET WatermarkValue = @NewWatermark
    WHERE id = @id;

    GO

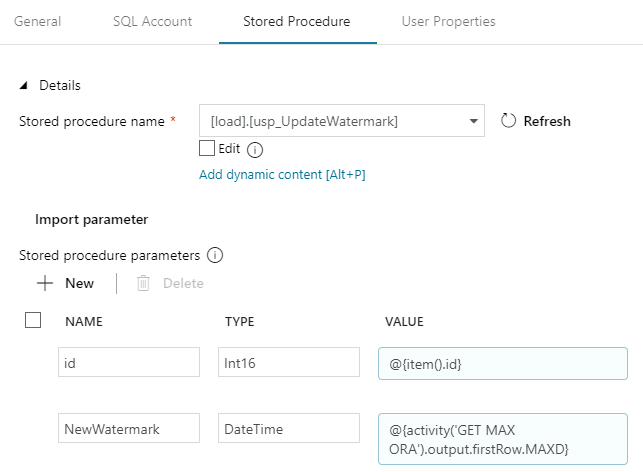

Create it on your Azure SQL database. Then use it in ADF:

- Drop a Stored Procedure activity into the project and connect the constraint from COPY ORA to it. Rename it “UPDATE WATERMARK ORA” and view its properties.

- In SQL Account, set your Azure SQL Database linked service.

- Now go to “Stored Procedure”, select our procedure name and click “Import parameter”.

- Now we have to pass values for the procedure parameters, and we will parametrize them too. id should be @{item().id} and NewWatermark has to be: @{activity('GET MAX ORA').output.firstRow.MAXD} .

And basically, that’s all! This logic should copy rows from all Oracle tables defined in the configuration.

We can now test it. This can be done with “Debug” or just by triggering pipeline run.

If everything is working fine, we can just copy/paste all content from “FOR EACH ORA” into “FOR EACH PG“.

Just remember to rename all activities properly to reflect the new source/destination names (PG). Also, all parameters and SELECT queries have to be redefined. Luckily, PostgreSQL supports ISO dates out of the box.

Source code

Here are all components in JSON. You can use them to copy/paste logic directly inside ADF V2 code editor or save as files in GIT repository.

Below is source code for pipeline only. All other things can be downloaded in zip file in “Download all” at the bottom of this article.

Pipeline

    {
      "name": "LOAD DELTA",
      "properties": {
        "activities": [
          {
            "name": "GET CFG",
            "type": "Lookup",
            "policy": { "timeout": "7.00:00:00", "retry": 0, "retryIntervalInSeconds": 30, "secureOutput": false },
            "typeProperties": {
              "source": {
                "type": "SqlSource",
                "sqlReaderQuery": {
                  "value": "SELECT * from @{pipeline().parameters.ConfigTable}\nIF @@ROWCOUNT = 0 THROW 50000,'ojej...',1",
                  "type": "Expression"
                }
              },
              "dataset": {
                "referenceName": "SQL",
                "type": "DatasetReference",
                "parameters": { "TableName": "dummy" }
              },
              "firstRowOnly": false
            }
          },
          {
            "name": "FOR EACH ORA",
            "type": "ForEach",
            "dependsOn": [
              { "activity": "ORA CFG", "dependencyConditions": [ "Succeeded" ] }
            ],
            "typeProperties": {
              "items": { "value": "@activity('ORA CFG').output.value", "type": "Expression" },
              "isSequential": false,
              "activities": [
                {
                  "name": "COPY ORA",
                  "type": "Copy",
                  "dependsOn": [
                    { "activity": "GET MAX ORA", "dependencyConditions": [ "Succeeded" ] }
                  ],
                  "policy": { "timeout": "7.00:00:00", "retry": 0, "retryIntervalInSeconds": 30, "secureOutput": false },
                  "userProperties": [
                    { "name": "Destination", "value": "@{item().DST_tab}" }
                  ],
                  "typeProperties": {
                    "source": {
                      "type": "OracleSource",
                      "oracleReaderQuery": {
                        "value": "SELECT \n @{item().Cols} FROM @{item().SRC_tab} \n\nWHERE \n\n@{item().WatermarkColumn} > \nTO_DATE('@{item().WatermarkValue}', 'YYYY-MM-DD\"T\"HH24:MI:SS\"Z\"')\nAND\n@{item().WatermarkColumn} <=\nTO_DATE('@{activity('GET MAX ORA').output.firstRow.MAXD}', 'YYYY-MM-DD\"T\"HH24:MI:SS\"Z\"')",
                        "type": "Expression"
                      }
                    },
                    "sink": {
                      "type": "SqlSink",
                      "writeBatchSize": 10000,
                      "preCopyScript": { "value": "TRUNCATE TABLE @{item().DST_tab}", "type": "Expression" }
                    },
                    "enableStaging": false,
                    "cloudDataMovementUnits": 0
                  },
                  "inputs": [
                    { "referenceName": "ORA", "type": "DatasetReference" }
                  ],
                  "outputs": [
                    {
                      "referenceName": "SQL",
                      "type": "DatasetReference",
                      "parameters": {
                        "TableName": { "value": "@{item().DST_tab}", "type": "Expression" }
                      }
                    }
                  ]
                },
                {
                  "name": "GET MAX ORA",
                  "type": "Lookup",
                  "policy": { "timeout": "7.00:00:00", "retry": 0, "retryIntervalInSeconds": 30, "secureOutput": false },
                  "typeProperties": {
                    "source": {
                      "type": "OracleSource",
                      "oracleReaderQuery": {
                        "value": "SELECT MAX(@{item().WatermarkColumn}) as maxd FROM @{item().SRC_tab} ",
                        "type": "Expression"
                      }
                    },
                    "dataset": { "referenceName": "ORA", "type": "DatasetReference" }
                  }
                },
                {
                  "name": "UPDATE WATERMARK ORA",
                  "type": "SqlServerStoredProcedure",
                  "dependsOn": [
                    { "activity": "COPY ORA", "dependencyConditions": [ "Succeeded" ] }
                  ],
                  "policy": { "timeout": "7.00:00:00", "retry": 0, "retryIntervalInSeconds": 30, "secureOutput": false },
                  "typeProperties": {
                    "storedProcedureName": "[load].[usp_UpdateWatermark]",
                    "storedProcedureParameters": {
                      "id": {
                        "value": { "value": "@{item().id}", "type": "Expression" },
                        "type": "Int16"
                      },
                      "NewWatermark": {
                        "value": { "value": "@{activity('GET MAX ORA').output.firstRow.MAXD}", "type": "Expression" },
                        "type": "DateTime"
                      }
                    }
                  },
                  "linkedServiceName": { "referenceName": "AzureSQL", "type": "LinkedServiceReference" }
                }
              ]
            }
          },
          {
            "name": "ORA CFG",
            "type": "Filter",
            "dependsOn": [
              { "activity": "GET CFG", "dependencyConditions": [ "Succeeded" ] }
            ],
            "typeProperties": {
              "items": { "value": "@activity('GET CFG').output.value", "type": "Expression" },
              "condition": { "value": "@equals(item().SRC_name,'ORA')", "type": "Expression" }
            }
          },
          {
            "name": "PG CFG",
            "type": "Filter",
            "dependsOn": [
              { "activity": "GET CFG", "dependencyConditions": [ "Succeeded" ] }
            ],
            "typeProperties": {
              "items": { "value": "@activity('GET CFG').output.value", "type": "Expression" },
              "condition": { "value": "@equals(item().SRC_name,'PG')", "type": "Expression" }
            }
          },
          {
            "name": "FOR EACH PG",
            "type": "ForEach",
            "dependsOn": [
              { "activity": "PG CFG", "dependencyConditions": [ "Succeeded" ] }
            ],
            "typeProperties": {
              "items": { "value": "@activity('PG CFG').output.value", "type": "Expression" },
              "isSequential": false,
              "activities": [
                {
                  "name": "Copy PG",
                  "type": "Copy",
                  "dependsOn": [
                    { "activity": "GET MAX PG", "dependencyConditions": [ "Succeeded" ] }
                  ],
                  "policy": { "timeout": "7.00:00:00", "retry": 0, "retryIntervalInSeconds": 30, "secureOutput": false },
                  "userProperties": [
                    { "name": "Destination", "value": "@{item().DST_tab}" }
                  ],
                  "typeProperties": {
                    "source": {
                      "type": "RelationalSource",
                      "query": {
                        "value": "SELECT @{item().Cols} FROM @{item().SRC_tab} \n\nWHERE \n\n@{item().WatermarkColumn} > \n'@{item().WatermarkValue}'\nAND\n@{item().WatermarkColumn} <=\n'@{activity('GET MAX PG').output.firstRow.MAXD}'",
                        "type": "Expression"
                      }
                    },
                    "sink": {
                      "type": "SqlSink",
                      "writeBatchSize": 10000,
                      "preCopyScript": { "value": "TRUNCATE TABLE @{item().DST_tab}", "type": "Expression" }
                    },
                    "enableStaging": false,
                    "cloudDataMovementUnits": 0
                  },
                  "inputs": [
                    { "referenceName": "PG", "type": "DatasetReference" }
                  ],
                  "outputs": [
                    {
                      "referenceName": "SQL",
                      "type": "DatasetReference",
                      "parameters": {
                        "TableName": { "value": "@{item().DST_tab}", "type": "Expression" }
                      }
                    }
                  ]
                },
                {
                  "name": "GET MAX PG",
                  "type": "Lookup",
                  "policy": { "timeout": "7.00:00:00", "retry": 0, "retryIntervalInSeconds": 30, "secureOutput": false },
                  "typeProperties": {
                    "source": {
                      "type": "RelationalSource",
                      "query": {
                        "value": "SELECT MAX(@{item().WatermarkColumn}) as maxd FROM @{item().SRC_tab} ",
                        "type": "Expression"
                      }
                    },
                    "dataset": { "referenceName": "PG", "type": "DatasetReference" }
                  }
                },
                {
                  "name": "UPDATE WATERMARK PG",
                  "type": "SqlServerStoredProcedure",
                  "dependsOn": [
                    { "activity": "Copy PG", "dependencyConditions": [ "Succeeded" ] }
                  ],
                  "policy": { "timeout": "7.00:00:00", "retry": 0, "retryIntervalInSeconds": 30, "secureOutput": false },
                  "typeProperties": {
                    "storedProcedureName": "[load].[usp_UpdateWatermark]",
                    "storedProcedureParameters": {
                      "id": {
                        "value": { "value": "@{item().id}", "type": "Expression" },
                        "type": "Int16"
                      },
                      "NewWatermark": {
                        "value": { "value": "@{activity('GET MAX PG').output.firstRow.MAXD}", "type": "Expression" },
                        "type": "DateTime"
                      }
                    }
                  },
                  "linkedServiceName": { "referenceName": "AzureSQL", "type": "LinkedServiceReference" }
                }
              ]
            }
          }
        ],
        "parameters": {
          "ConfigTable": { "type": "String", "defaultValue": "load.cfg" }
        }
      }
    }

Download all

Hi Michal, you are absolutely correct, fileName declared as pipeline parameter and filled with value at sink destination. Folder path can be simplified:

    "folderPath": {
        "value": "@concat('/dev-raw-data-zone/oracle_erp_full_tables/',formatDateTime(pipeline().parameters.windowStart, 'yyyy/MM/dd'))",
        "type": "Expression"
    }

Want to thank you again, your example is a most complete, understandable and comprehensive learning guideline I was able to find on line.

Bruce.

Thank you. I am glad that I could help 🙂

How is it that you can have truncate table in your pipeline? Your case is not appending new records to a table that holds everything, but just copying new records to a staging table, right?

Simply removing the truncate table statement would append records. It would work for a system that never updates records. If records are modified and they come over again, there would be a need to do some merging or overwriting.

@De jan

Thank you for pointing that out 🙂

Of course, it always depends on our needs.

This example shows, that you can use “Pre-copy script” before loading will start.

In another case, this field could be empty or, for example, run partition switching or even partition truncating (yes, since MSSQL 2016 version we can truncate partitions).

ETL staging is a huge topic. I used to work with many scenarios in different projects, so maybe this was too obvious for me, that there is a choice 🙂

I have added relevant information under the example of “pre-script”. Thanks!

Hello Michał,

First of all, thank you very much for such a detailed guide. It’s been a great help for me while trying to implement an incremental load solution from Azure SQL Database to Azure SQL Data Warehouse.

However, if it’s not too much trouble, I’d appreciate some help in the COPY ORA section, step 3: “Also, do the same with Pre-copy script and put there: TRUNCATE TABLE @{item().DST_tab}”.

Could you please elaborate on why this TRUNCATE TABLE is needed? As it stands, my baseline version of the target table is being truncated and once the pipeline ends it remains empty.

I’m struggling to understand what the role of this TRUNCATE command is.

Thanks in advance.

Regards,

Pedro

Please disregard my comment since I had not read your reply to De jan.

@Pedro , no problem 🙂 It just only confirms how important it is to explain this step. Thanks!

Hello Michal

Thanks for sharing the post, it’s really nice,

Can you please suggest me something about:

I have a csv file in blob storage that is updated daily with change in name of file (e.g. Products.25-03-2018 and data is continuously changed every day). I created a data factory to take csv file from blob storage to Azure SQL database, I cannot figure out how I can resolve this issue? may be dynamic content?

if you can suggest me something to do, that would be great !

Second, in your example above if the source and sink is same that is Azure SQL database, how it will work?

Thanks in advance

Fraz

Hi Fraz!

So, if I understand it correctly, you have a problem with dynamic parametrization of a source file.

And the pattern of the filename is always: Products.DD-MM-YYYY.csv

If this is a case when you just need to take Products.25-03-2018.csv by the day 25.03.2018 and for that particular day pipeline will be run – then it’s a matter of using two (or three) functions for dynamic concatenation in your source file expression.

Look at the documentation here:

https://docs.microsoft.com/en-us/azure/data-factory/control-flow-expression-language-functions#date-functions

1. THE CURRENT DATE

You can always return the current date and time in UTC format using: utcnow().

Now the question is – do you have files with date taken from UTC timestamp or maybe it’s in another timezone? And if this timezone has daylight saving time you should also consider it in your expression. Depending on the answers you can use function addhours() because it looks like Data Factory expressions do not have any timezone aware date functions (yet?)

2. FORMATTING TO YOUR NEEDS

Then, after using utcnow() and anything else needed to get the proper date, you can format it as a string using a custom format.

Look at the formatDateTime() function; you can use it with a custom format declaration taken directly from the .NET platform. It’s described here: https://docs.microsoft.com/en-us/dotnet/standard/base-types/custom-date-and-time-format-strings

So finally it should look similar to this:

formatDateTime(utcnow(), 'dd-MM-yyyy')

3. CONCATENATE STRING TO MATCH YOUR FILES:

So in the final stage, it should be concatenated with all names required to identify your file. Here we can use another function, but of course, you can use string interpolation explained in the article 🙂

concat('Products.', formatDateTime(utcnow(), 'dd-MM-yyyy'), '.csv')
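If you want to check the expected file name outside ADF first, the same concatenation can be sketched in Python. This only mirrors the expression; the `products_file_name` helper is made up for the example, and `%d-%m-%Y` is the Python counterpart of the .NET `dd-MM-yyyy` format.

```python
from datetime import datetime, timezone

def products_file_name(now: datetime) -> str:
    """Build Products.DD-MM-YYYY.csv for the given moment."""
    return "Products." + now.strftime("%d-%m-%Y") + ".csv"

# A fixed UTC timestamp, matching the example date from the question.
name = products_file_name(datetime(2018, 3, 25, 8, 0, tzinfo=timezone.utc))
print(name)

# The counterpart of utcnow() in the ADF expression.
today = products_file_name(datetime.now(timezone.utc))
print(today)
```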

The answer to your question regarding if it does matter which db source and db sink you will have … Well in general – no, but of course I’m using some special functions like TO_DATE which does not exist in SQL Server so you have to replace anything that applies to ORACLE or PG with the proper replacement available in MS database 😉 That’s all, everything else should work just like in a example above ;]

Regards,

m.

Hi Michal

Thank you very much for the detailed explanation, I am going to try it today and if there is anything I could not do, I may bother you once more,

Thanks again, appreciate your support!

Fraz

My files are on ADLS gen 2 raw area. I want to copy few columns from individual files to a ADLS gen 2 STAGE area . I want to achieve it dynamically. I just have one copy activity. I want file mappings to be stored in sql table, read it and copy only required columns for individual files as per mapping.. Any help please.

If I understand correctly, you have to define exact column names of individual files on data lake storage to utilize schema/column mapping option of ADF. That way it can’t be dynamic. The only way seems to be with databricks.

Hi Sanjeet.

This requires a different approach.

The SQL commands are fortunately flexible, so you can construct a query as you like. Column lists can be just a definition hardcoded dynamically as a query string. Then we can easily store them just as a text in the database.

With semi-structured files like CSV this is something different. Unfortunately, we do not use SQL to query them.

Nevertheless, you need to dig deeper into the topic of schema mappings:

https://docs.microsoft.com/bs-latn-ba/azure/data-factory/copy-activity-schema-and-type-mapping

With some definitions stored in your database, you need to dynamically build the "mappings": [] section.

TIP: if your database supports JSON formatting, maybe you should try to convert your query result to JSON before you retrieve the mappings from the config table. At least I would do it if I had to submit JSON in ADF 🙂

Then you can pass this mapping definition as a dynamic parameter in your copy activity.
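To make the shape of such a mapping definition more concrete, here is a small Python sketch that turns (source column, sink column) pairs into a translator object. The pairs stand in for rows read from a config table; the function name and the sample column names are hypothetical, and the "TabularTranslator" layout follows my reading of the schema mapping documentation linked above, so verify it against your ADF version.

```python
import json


def build_translator(column_pairs):
    """Build a TabularTranslator-style dict from (source, sink) column name pairs."""
    return {
        "type": "TabularTranslator",
        "mappings": [
            {"source": {"name": source_name}, "sink": {"name": sink_name}}
            for source_name, sink_name in column_pairs
        ],
    }


if __name__ == "__main__":
    # Hypothetical rows, as they might come back from a mapping config table
    config_rows = [("ProductID", "product_id"), ("Name", "product_name")]
    print(json.dumps(build_translator(config_rows), indent=2))
```

The JSON text produced this way is the kind of value that would be handed to the copy activity as a dynamic parameter.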

Just one more thing.

Remember that you can use Get Metadata Activity to dig into the specific file and check the structure.

https://docs.microsoft.com/bs-latn-ba/azure/data-factory/control-flow-get-metadata-activity

I didn’t test it, but it looks like ADLS Gen2 is supported and you can get structure and even columnCount. This can also be used to build something dynamically.

I imagine a scenario where I can use it to retrieve the structure and then parse it (with ADF expressions or maybe in my SQL Server database with some additional JSON parsing commands) 🙂
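As a rough illustration of the parsing step, the Python sketch below filters a structure list down to the columns you actually want and emits one mapping entry per column. It assumes the structure output is a list of objects with "name" and "type" keys; the function name and the sample columns are hypothetical, and like the idea itself this was not tested against a real Get Metadata output.

```python
def mapping_from_structure(structure, wanted_columns):
    """Keep only the wanted columns from a structure list and map each to itself."""
    wanted = set(wanted_columns)
    return [
        {"source": {"name": column["name"]}, "sink": {"name": column["name"]}}
        for column in structure
        if column["name"] in wanted  # exact, case-sensitive name match
    ]


if __name__ == "__main__":
    # Assumed shape of a structure output for one file
    sample_structure = [
        {"name": "ID", "type": "Int32"},
        {"name": "COUNTRY_NAME", "type": "String"},
        {"name": "COMMENTS", "type": "String"},
    ]
    print(mapping_from_structure(sample_structure, ["ID", "COUNTRY_NAME"]))
```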

Dear Michal,

Thanks, this article is really helpful.

Could you please advise whether this will also take care of updated columns? I.e., if any value has been updated in the source database, would it then be updated in the destination?

Regards,

Ashish

Ashish,

Hmm, If I understood you correctly, the answer is no.

This mechanism uses watermark columns to detect changes. It’s a simple one: if the watermark value has changed since the last upload, take the entire row.

Updates on the source are a different issue that I do not describe in my article, since the watermark approach is one of the most commonly used these days.

So if you update one column, for example COUNTRY_NAME, without marking the change in the watermark column, which is UPDATE_DATE, the change will not be tracked and no update will be sent to the destination system.
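The watermark rule can be sketched in a few lines of Python. This is only an illustration of the logic, not the pipeline itself: rows are plain dictionaries, and the function names and sample data are hypothetical.

```python
from datetime import datetime


def rows_to_load(rows, last_watermark, watermark_column="UPDATE_DATE"):
    """Return whole rows whose watermark is newer than the last stored one."""
    return [row for row in rows if row[watermark_column] > last_watermark]


def next_watermark(rows, last_watermark, watermark_column="UPDATE_DATE"):
    """Return the highest watermark seen, or the old one if there are no rows."""
    return max([row[watermark_column] for row in rows] + [last_watermark])


if __name__ == "__main__":
    last_run = datetime(2019, 8, 1)
    source = [
        # COUNTRY_NAME was changed, but UPDATE_DATE was not touched: not detected
        {"ID": 1, "COUNTRY_NAME": "Polska", "UPDATE_DATE": datetime(2019, 7, 15)},
        # UPDATE_DATE is newer than the last run: the entire row is taken
        {"ID": 2, "COUNTRY_NAME": "Norway", "UPDATE_DATE": datetime(2019, 8, 2)},
    ]
    print(rows_to_load(source, last_run))
    print(next_watermark(source, last_run))
```

Only the row with ID 2 is selected, which is exactly the limitation described above for updates that do not touch the watermark column.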

Dear Michal,

Many thanks for your prompt response.

Apologies, I wasn’t clear in my query. My original question was: ‘If a column is updated in a table along with its watermark column, would that be updated in the target table as well?’.

For example if ‘COUNTRY_NAME’ has been updated along with watermark column which is ‘UPDATE_DATE’ would that be updated in target database ?

Thanks ,

Ashish

Ok, I think your question comes from confusing a stage load with replication 🙂 With my example you copy the entire row from the source table to the destination staging table. A record on the destination is never updated. It’s always inserted as a new row. This is part of a process that is very common in data warehousing: you first load all rows with all column values into an empty table, and then, according to your needs, you merge them or refresh partitions in your core tables, replacing old rows with new ones.
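The second step, merging staged rows into a core table, could look like the Python sketch below. It is an in-memory illustration only: the key column ID, the watermark column UPDATE_DATE and the function name are assumptions, and staged rows are assumed to be newer than what the core table already holds.

```python
def merge_staging_into_core(core_rows, staging_rows, key="ID", watermark_column="UPDATE_DATE"):
    """Replace old core rows with staged versions and add rows that are new."""
    merged = {row[key]: row for row in core_rows}
    # Apply staged rows oldest first, so the latest version of each key wins
    for row in sorted(staging_rows, key=lambda r: r[watermark_column]):
        merged[row[key]] = row
    return list(merged.values())


if __name__ == "__main__":
    core = [{"ID": 1, "COUNTRY_NAME": "Poland", "UPDATE_DATE": "2019-07-01"}]
    staging = [
        {"ID": 1, "COUNTRY_NAME": "Polska", "UPDATE_DATE": "2019-08-02"},
        {"ID": 2, "COUNTRY_NAME": "Norway", "UPDATE_DATE": "2019-08-03"},
    ]
    print(merge_staging_into_core(core, staging))
```

In a real warehouse this step would be a MERGE statement, a delete-and-insert, or a partition rebuild on the database side; the sketch only shows which version of a row survives.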

Thank you so much Michal , this is perfect article i have found on internet for Azure 🙂

To maintain our database , we will add one more activity in our flow which is to delete and insert updated rows from staging table to target data system 🙂

Thanks,

Ashish

That should be fine, as long as it fits your scale and time window.

You see, for bigger systems (dozens of terabytes of storage, dozens or even hundreds of gigabytes of data to load), DELETE operations cost the engine too much and generate bloat in your transaction logs.

In Azure Data Warehouse (or Parallel Data Warehouse, APS systems or any other MPP system) it’s better to divide the load into partitions and create them once again by UNIONing old and new data, switching the partitions and dropping the old ones. A good example is the use of CTAS (create table as select) mentioned in good practices here: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-best-practices#minimize-transaction-sizes

You can try to build it also in your data warehouse, but of course only if scale will be (or is) that big.

That sounds more complicated to implement, but in the end it is cheaper than a DELETE process (bulk inserts are generally faster, and deletes also rely on cleanup mechanisms such as ghost row deletion in SQL Server or the vacuum process over dead tuples in PostgreSQL). But this is another topic 🙂

Hi Michal,

Great Article!!!

I have a question on timestamp column. Suppose we have thousands of tables for which timestamp column is not there and do not want to alter those tables to add such column as well.

How would I fetch the delta values for thousands of tables which don’t have a timestamp column?

Please suggest

That is a really tricky question, because the answer is of course: “it depends”.

The first and most obvious scenario is to use a trigger on the table and create the logic to store the updated row identifier and the timestamp somewhere else (in a prepared structure).

But again, if we are talking about a critical transactional system that requires INSERTs/UPDATEs to be as short as possible, triggers are the ugliest and most inefficient way to do it. But sometimes there is no other way.
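To show the trigger idea in a form that runs anywhere, here is a small sketch using Python with an in-memory SQLite database. SQLite trigger syntax differs from SQL Server, and the table, trigger and function names are made up for the illustration, so treat it as a picture of the pattern only. It also covers only UPDATEs; a real setup would need the same for INSERTs.

```python
import sqlite3


def demo_trigger_capture():
    """Log the id of every updated row into a separate change table via a trigger."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE country (id INTEGER PRIMARY KEY, country_name TEXT);

        -- The prepared structure: row identifier plus change timestamp
        CREATE TABLE country_changes (
            row_id INTEGER,
            changed_at TEXT DEFAULT CURRENT_TIMESTAMP
        );

        CREATE TRIGGER trg_country_update AFTER UPDATE ON country
        BEGIN
            INSERT INTO country_changes (row_id) VALUES (NEW.id);
        END;

        INSERT INTO country (id, country_name) VALUES (1, 'Poland'), (2, 'Norway');
        UPDATE country SET country_name = 'Polska' WHERE id = 1;
        """
    )
    changed_ids = [row[0] for row in conn.execute("SELECT row_id FROM country_changes")]
    conn.close()
    return changed_ids


if __name__ == "__main__":
    print(demo_trigger_capture())
```

The source table itself is left without a timestamp column; the delta is read from the change table instead.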

Anyway, if we are talking about SQL Server as a source, there are some other possible solutions that are less CPU- and IO-intensive than triggers: change data capture or change tracking. You can check them in the documentation. But first read all the requirements and limitations, there are always some points to consider.

This document may be useful: https://docs.microsoft.com/en-us/sql/relational-databases/track-changes/track-data-changes-sql-server?view=sql-server-2017

Dear Michal,

We have implemented this solution and this is working as expected , thank you.

We need a little help on the SQL procedure side, to update the timestamp in the watermark table. We have created a parameterised procedure and pass the table name in a variable as well, so we had to use dynamic SQL to achieve this.

This is giving us the error ‘Conversion failed when converting date and/or time from character string’. We are using the syntax below in our procedure:

set @SQL = 'update' + @tablename + 'set watermarkvalue =' + @Newwatermarkvalue + 'where id =' + @id;

Execute sp_executesql @SQL

please can you advise on any workaround for this

Regards,

Ashish

Hi Ashish,

first of all: what stands behind your parameters? What types are they and what values do they have?

Your error message indicates, that you have a problem with conversion to date. But without any values, I cannot suggest any solutions.

Below is just a simple example, but it all depends on your scenario if this can be helpful or not.

It uses temporary objects and rollbacks at the end, so you can run it anywhere, anytime.

SET NOCOUNT ON;
BEGIN TRAN;

-- Temporary watermark table with a single default row
CREATE TABLE #test_watermark
(
    id INT DEFAULT 1,
    watermarkvalue DATETIME DEFAULT GETDATE()
);
GO

INSERT INTO #test_watermark DEFAULT VALUES;
GO

-- Temporary procedure that builds and runs the dynamic UPDATE
CREATE PROC #up_watermark
(
    @tablename sysname,
    @Newwatermarkvalue NVARCHAR(100),
    @id NCHAR(1)
)
AS
BEGIN
    DECLARE @SQL NVARCHAR(MAX);
    SET @SQL = N'UPDATE ' + @tablename + N' SET watermarkvalue = ' + @Newwatermarkvalue + N' where id = ' + @id;
    PRINT @SQL;
    EXEC sp_executesql @SQL;
END;
GO

SELECT * FROM #test_watermark;

EXEC #up_watermark @tablename = '#test_watermark', -- sysname
    @Newwatermarkvalue = '''2010-01-01''', -- nvarchar(100)
    @id = '1'; -- nchar(1)

SELECT * FROM #test_watermark;

ROLLBACK;

Hi Michal,

Thank you.

I have created below procedure and can see from ADF that below values are getting passed.

create PROCEDURE sp_UpdateWatermark
(
    @id nvarchar(10),
    @NewWatermark datetime,
    @TableName NVARCHAR(200)
)
AS
BEGIN
    SET NOCOUNT ON;
    DECLARE @Sql NVARCHAR(500)
    SET @Sql = 'Update ' + @TableName + 'set WatermarkValue=' + convert(varchar(200), @NewWatermark,120 ) + 'Where id =' + @id;
    EXECUTE sp_executesql @Sql
END

--- values passed from ADF ---

"id": {
    "value": "4",
    "type": "String"
},
"NewWatermark": {
    "value": "2019-08-01T12:37:43Z",
    "type": "DateTime"
},
"TableName": {
    "value": "CFG.DW_ACCOUNT_CHARGE_DTL_HIST_V",
    "type": "String"
}

Hi Ashish,

ok, now I get it. So the problem is with the Zulu time passed from ADF expressions when you want to save the new value in the config table. That is strange; I remember that my solution worked out of the box in Azure SQL Database and no conversion was needed. Nevertheless, you can pass the date with Zulu time as a string, then convert it to datetime (style 127) and back to a string (style 120).
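The same round trip can be checked outside the database with a short Python sketch. The function name is hypothetical, and the two format strings are only assumed to correspond to styles 127 (ISO 8601 with Z) and 120 (yyyy-mm-dd hh:mi:ss) for a value without fractional seconds.

```python
from datetime import datetime


def zulu_to_sql_literal(value: str) -> str:
    """Turn '2019-08-01T12:37:43Z' into the quoted literal '2019-08-01 12:37:43'."""
    # Parse the ISO 8601 "Zulu" form (the style 127 step)
    parsed = datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ")
    # Format as 'yyyy-mm-dd hh:mi:ss' (the style 120 step) and wrap in single quotes
    return "'" + parsed.strftime("%Y-%m-%d %H:%M:%S") + "'"


if __name__ == "__main__":
    print(zulu_to_sql_literal("2019-08-01T12:37:43Z"))
```

The surrounding quotes matter: without them the value would be concatenated into the dynamic UPDATE as a bare token instead of a string literal.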

SELECT '''' + CONVERT(NVARCHAR(100), CONVERT(datetime, '2019-08-01T12:37:43Z', 127), 120) + ''''

Hi Michal,

Thank you so much 🙂

It worked like a charm..

regards,

Ashish

Hi Ashish!

That’s great! I am glad that I could help.

Hi Michal,

thank you. It’s me again 🙂 apologies for so many queries.

We have now copied the data into our staging tables. You have already suggested the CTAS option to us for maintaining the data warehouse. Is it possible to write that within ADF itself?

We want to update and insert in dimension tables in the same pipeline.

Regards,

Ashish

Hi Michal,

Please ignore my above question. I have created a parameterised procedure to achieve this in ADF pipeline 🙂

Thanks,

Ashish

Hi Ashish,

nice to hear that 🙂

regards,

m.