This post explains things that are difficult to find even in English. That’s why I will break my rule and not write it in my native language! For the Polish version, I invite you to Google Translate :>

Introduction

Azure Automation is just a platform for running PowerShell and Python scripts in the cloud.

In marketing language, it’s a swiss army knife 😛

Here is how Microsoft describes it:

“Azure Automation delivers a cloud-based automation and configuration service that provides consistent management across your Azure and non-Azure environments. It consists of process automation, update management, and configuration features. Azure Automation provides complete control during deployment, operations, and decommissioning of workloads and resources.”

Apart from this gibberish, I will point out some important issues…

Know your Automation

- It has a so-called “feature”, Fair Share, which basically prevents you from running scripts for longer than 3 hours.

- Well, at least it will pause (unload) your script after 3 hours. And if you didn’t implement it as a workflow with checkpoints, it will RESTART your script from the beginning.

- If you do implement checkpoints, it will resume your script from the last known checkpoint. BUT it will do this only 3 times! So you cannot implement logic that takes more than 9 hours to process…

- The workaround is to connect your own machine (server or laptop) as a hybrid worker.

Read more about fair share here: https://docs.microsoft.com/en-us/azure/automation/automation-runbook-execution#fair-share

Since Azure Data Factory cannot simply pause and resume an activity, we have to assume that the pipeline will not run for longer than 3 hours.

Any other scenario requires you to write custom logic, perhaps splitting pipelines into shorter ones and implementing checkpoints between their runs…
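One possible shape for such custom logic is a polling helper that stops waiting shortly before the 3-hour limit and tells the caller it gave up, so the runbook can exit cleanly instead of being unloaded. This is only a sketch: the function name `Wait-UntilDone`, the 170-minute budget and the status names `Queued` and `InProgress` are my assumptions, and the status source is passed in as a script block so the helper does not depend on any Azure cmdlet.

```powershell
# Sketch only: Wait-UntilDone is a hypothetical helper, not part of any Azure module.
function Wait-UntilDone {
    param(
        # Script block that returns the current status as a string
        [Parameter(Mandatory = $true)][scriptblock]$GetStatus,
        # Statuses that mean "still running" (assumed names)
        [string[]]$RunningStates = @('Queued', 'InProgress'),
        # Seconds to sleep between checks
        [int]$PollSeconds = 60,
        # Give up a little before the 3-hour fair share limit (assumed budget)
        [timespan]$MaxWait = [timespan]::FromMinutes(170)
    )

    $sw = [System.Diagnostics.Stopwatch]::StartNew()
    $status = & $GetStatus

    while ($status -in $RunningStates) {
        if ($sw.Elapsed -ge $MaxWait) {
            # Out of time: report the last status seen and let the caller decide
            return [pscustomobject]@{ Status = $status; TimedOut = $true }
        }
        Start-Sleep -Seconds $PollSeconds
        $status = & $GetStatus
    }

    [pscustomobject]@{ Status = $status; TimedOut = $false }
}
```

In a runbook, the script block would wrap whatever call returns the pipeline run status; when `TimedOut` is true, the caller could store the run ID somewhere and let a later job pick it up.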

Preparations

Before we create the runbook, we must set up a credential and some variables.

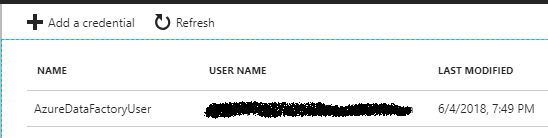

Adding credential

We have to set up a credential that PowerShell will use to handle the pipeline run in Azure Data Factory V2.

- Go to the Automation account and, under Shared Resources, click “Credentials”.

- Add a credential. It must be an account with privileges to run and monitor a pipeline in ADF. I will name it “AzureDataFactoryUser”. Set the login and password.

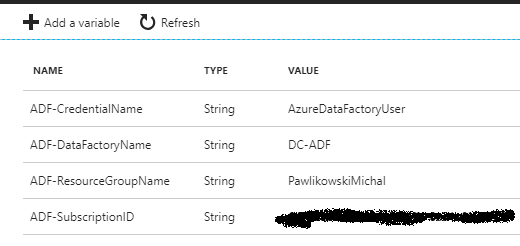

Adding variables

We will use variables to parametrize some account information so that it is not hardcoded in our script.

- Go to the Automation account and, under Shared Resources, click “Variables”.

- Add four string variables and set their values. The first points to the credential name, the second provides the data factory name, the third the resource group name and the fourth the ADF’s subscription ID.

```
ADF-CredentialName
ADF-DataFactoryName
ADF-ResourceGroupName
ADF-SubscriptionID
```
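If you prefer scripting over clicking, the same four variables could probably be created with `New-AzureRmAutomationVariable` from the AzureRM.Automation module. This is a hedged sketch: every value below, including the resource group, account, and factory names, is a made-up placeholder, and the function `Publish-AdfVariables` is a hypothetical wrapper. Nothing is sent to Azure until you call it from a logged-in session.

```powershell
# Placeholder values only; replace them with your own.
$adfVariables = [ordered]@{
    'ADF-CredentialName'    = 'AzureDataFactoryUser'
    'ADF-DataFactoryName'   = 'my-data-factory'
    'ADF-ResourceGroupName' = 'my-adf-resource-group'
    'ADF-SubscriptionID'    = '00000000-0000-0000-0000-000000000000'
}

# Hypothetical wrapper: creates one unencrypted string variable per entry.
function Publish-AdfVariables {
    param(
        [Parameter(Mandatory = $true)][string]$ResourceGroupName,
        [Parameter(Mandatory = $true)][string]$AutomationAccountName,
        [Parameter(Mandatory = $true)][System.Collections.IDictionary]$Variables
    )
    foreach ($entry in $Variables.GetEnumerator()) {
        New-AzureRmAutomationVariable -ResourceGroupName $ResourceGroupName `
            -AutomationAccountName $AutomationAccountName `
            -Name $entry.Key -Value $entry.Value -Encrypted $false | Out-Null
    }
}

# Example call (requires a prior Add-AzureRmAccount):
# Publish-AdfVariables -ResourceGroupName 'my-automation-rg' -AutomationAccountName 'my-automation-account' -Variables $adfVariables
```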

Adding AzureRM.DataFactoryV2 module

You have to add a PowerShell module to your Automation account. Just go to Modules, click “Browse gallery” and search for “AzureRM.DataFactoryV2”.

Select it from the results list and click “Import”.

Creating the runbook

Now we can create a PowerShell runbook.

- Go to the Automation portal and, under “PROCESS AUTOMATION”, click “Runbooks”.

- Select “Add a runbook”.

- We will use quick create, so select “Create a new runbook”, then name it and select “PowerShell” as the type.

- Paste the script below in “Edit” mode, then save and publish it.

PowerShell script

Parameters

It has two parameters:

PipelineName – the name of the pipeline to run

CheckLoopTime – the number of seconds between status checks of a triggered pipeline run

The code

```powershell
param (
    [Parameter(Mandatory = $true)][string]$PipelineName,
    # A default value only takes effect when the parameter is not mandatory
    [Parameter(Mandatory = $false)][int]$CheckLoopTime = 60
)

# Stop on any error!
$ErrorActionPreference = "Stop"

# Get and set configs
$CredentialName    = Get-AutomationVariable -Name 'ADF-CredentialName'
$ResourceGroupName = Get-AutomationVariable -Name 'ADF-ResourceGroupName'
$DataFactoryName   = Get-AutomationVariable -Name 'ADF-DataFactoryName'
$SubscriptionID    = Get-AutomationVariable -Name 'ADF-SubscriptionID'

# Get credentials
$AzureDataFactoryUser = Get-AutomationPSCredential -Name $CredentialName

# Common arguments for the pipeline cmdlets (splatted below)
$adf = @{
    ResourceGroupName = $ResourceGroupName
    DataFactoryName   = $DataFactoryName
}

try {
    # Use credentials and choose subscription
    Add-AzureRmAccount -Credential $AzureDataFactoryUser | Out-Null
    Set-AzureRmContext -SubscriptionId $SubscriptionID | Out-Null

    # Get data factory object
    $df = Get-AzureRmDataFactoryV2 -ResourceGroupName $ResourceGroupName -Name $DataFactoryName

    # If it exists - run the pipeline
    if ($df) {
        Write-Output "Connected to data factory $DataFactoryName on $ResourceGroupName as $($AzureDataFactoryUser.UserName)"
        Write-Output "Running pipeline: $PipelineName"

        $RunID   = Invoke-AzureRmDataFactoryV2Pipeline @adf -PipelineName $PipelineName
        $RunInfo = Get-AzureRmDataFactoryV2PipelineRun @adf -PipelineRunId $RunID

        Write-Output "`nPipeline triggered!"
        Write-Output "RunID: $($RunInfo.RunId)"
        Write-Output "Started: $($RunInfo.RunStart)`n"

        $sw = [System.Diagnostics.Stopwatch]::StartNew()

        # Poll until the run is finished. "Queued" is included so that a run
        # which has not started yet is not mistaken for a finished one.
        while ($RunInfo.Status -in 'Queued', 'InProgress') {
            Write-Output "Last status: $($RunInfo.Status) | Last updated: $($RunInfo.LastUpdated) | Running time: $($sw.Elapsed.ToString('dd\.hh\:mm\:ss'))"
            Start-Sleep -Seconds $CheckLoopTime
            $RunInfo = Get-AzureRmDataFactoryV2PipelineRun @adf -PipelineRunId $RunID
        }

        $sw.Stop()

        Write-Output "`nFinished running in $($sw.Elapsed.ToString('dd\.hh\:mm\:ss'))!"
        Write-Output "Status:"
        Write-Output $RunInfo.Status

        # Fail the runbook job when the pipeline did not succeed
        if ($RunInfo.Status -ne "Succeeded") {
            throw "There was an error with running pipeline: $($RunInfo.PipelineName). Returned message was:`n$($RunInfo.Message)"
        }
    }
}
catch {
    # Rethrow so that the runbook job ends as failed
    throw
}
```

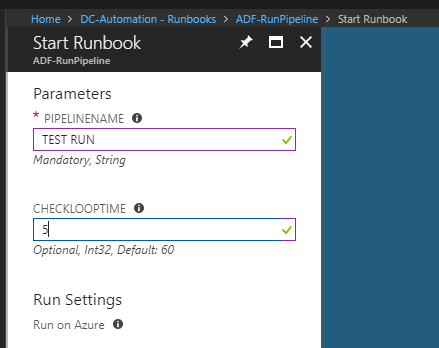

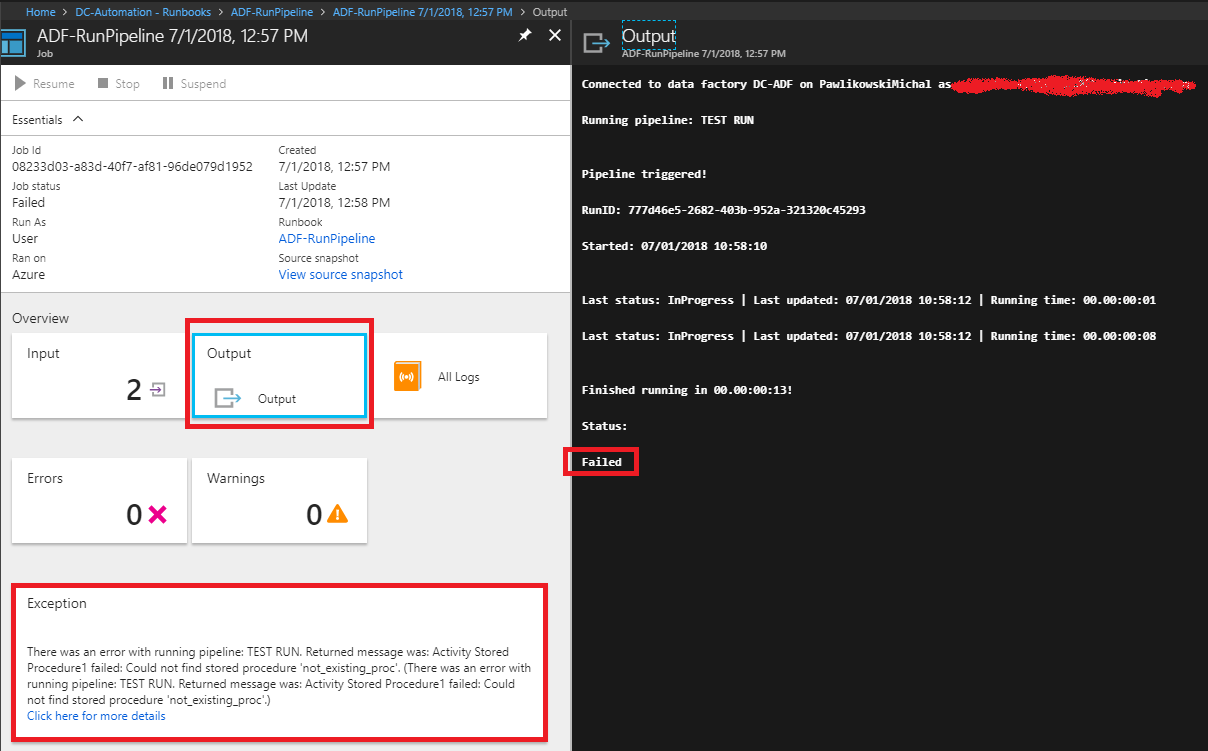

Test run

I will run my test pipeline, which simply starts a Wait activity (5 sec.) and then tries to run a nonexistent procedure (after which the pipeline should fail).

Go to the saved runbook and click “Start”.

Provide the parameters, like these, and click OK:

The runbook will be queued. Go to Output and wait for the results.
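The same test run can also be started from a PowerShell session instead of the portal. Below is a minimal sketch using `Start-AzureRmAutomationRunbook` from the AzureRM.Automation module; the pipeline name, runbook name, resource group and account name are placeholders I made up, and `Start-AdfRunbook` is a hypothetical wrapper that does nothing until you call it.

```powershell
# Values for the two runbook parameters (placeholder pipeline name)
$runbookParameters = @{
    PipelineName  = 'MyTestPipeline'
    CheckLoopTime = 30
}

# Hypothetical wrapper around Start-AzureRmAutomationRunbook
function Start-AdfRunbook {
    param(
        [Parameter(Mandatory = $true)][string]$ResourceGroupName,
        [Parameter(Mandatory = $true)][string]$AutomationAccountName,
        [Parameter(Mandatory = $true)][string]$RunbookName,
        [Parameter(Mandatory = $true)][hashtable]$Parameters
    )
    # Queues a job for the published runbook and returns the job object
    Start-AzureRmAutomationRunbook -ResourceGroupName $ResourceGroupName `
        -AutomationAccountName $AutomationAccountName `
        -Name $RunbookName -Parameters $Parameters
}

# Example call (requires a prior Add-AzureRmAccount):
# Start-AdfRunbook -ResourceGroupName 'my-automation-rg' -AutomationAccountName 'my-automation-account' -RunbookName 'Run-ADFPipeline' -Parameters $runbookParameters
```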

What are the benefits to running the pipelines in runbooks vs in ADF w/triggers? I can see that maybe more control of the jobs running especially when there are dependencies on other pipelines to finish.

… And are there any significant downsides to running an ADF pipeline under a RunBook? Thanks

You could set up a webhook that executes the runbook.

The webhook is triggered via an HTTPS URL, which can be called from anywhere.
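To illustrate, calling such a webhook comes down to a plain HTTP POST. The sketch below assumes you have already created a webhook for the runbook and copied its URL (the URL in the example call is a placeholder, and `Invoke-RunbookWebhook` is a hypothetical helper). As far as I know, the runbook parameter values are set when the webhook is created, and an optional request body reaches the runbook only if it declares a `$WebhookData` parameter, which the script above does not.

```powershell
# Hypothetical helper: POSTs to an Azure Automation webhook URL
function Invoke-RunbookWebhook {
    param(
        [Parameter(Mandatory = $true)][string]$WebhookUrl,
        # Optional payload; ignored by runbooks without a $WebhookData parameter
        [hashtable]$Payload = @{}
    )
    $body = $Payload | ConvertTo-Json
    # The response is expected to contain the IDs of the queued jobs
    Invoke-RestMethod -Method Post -Uri $WebhookUrl -Body $body -ContentType 'application/json'
}

# Example call with a placeholder URL:
# $response = Invoke-RunbookWebhook -WebhookUrl 'https://<your-webhook-url>'
# $response.JobIds
```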