TL;DR: Key Findings

- Every Databricks cluster node downloads over 12 GiB of data during startup.

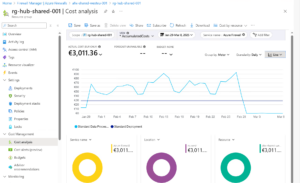

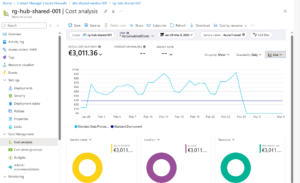

- When routed via Azure Firewall (Data Exfiltration pattern), this can lead to monthly costs exceeding €3,000 for control plane traffic alone.

- For one bank’s project, this behavior caused over 100 TB of unexpected traffic through the firewall.

- Root cause: startup scripts in VMs downloading packages/libraries/tools from internal blob storage endpoints used by Databricks.

- Best practices include:

- Private Endpoints for control plane traffic (the AzureDatabricks service tag), plus direct outbound routes to the Metastore, artifact Blob storage, system tables storage, log Blob storage, and Event Hubs endpoint IP addresses. With private networking in place, traffic to DBFS and the Lakehouse should be routed exclusively via Private Endpoints or Service Endpoints, never through Azure Firewall or any other service that charges for data transfer. Remember that VNet peering incurs costs even within the same region, and so does NAT Gateway. Factor all of these components into cost planning and test them thoroughly before going live.

- Custom UDRs + Service Endpoints or Service Endpoint Policies to control lakehouse traffic.

- Careful cost monitoring of VM-level network activity.

Introduction

While working on a Databricks implementation for a major banking client, I uncovered a critical but under-documented cost factor: the amount of data transferred by each VM during cluster startup. These transfers, routed through Azure Firewall, created a massive and unexpected cost spike in monthly billing.

The issue was directly tied to the way Databricks configures its clusters and communicates with Azure services during startup. This article documents our findings, cost implications, and suggestions to mitigate the problem.

Context: Secure Lakehouse Architecture for a Bank

I set out to design a secure, production-grade lakehouse environment aligned with Databricks’ Data Exfiltration Protection architecture. My key decisions included:

- Disabling public access to Databricks (“no public IP”)

- Using Private Endpoints for control plane communication

- Routing all required service traffic (Event Hub, SQL Metastore, Artifact Blob, Log Blob) through Azure Firewall

To control data paths:

- I implemented User Defined Routes (UDRs) to bypass the Azure Firewall for storage paths.

- Where needed, I combined:

- Private Endpoints (with caveat: requires VNet peering mesh)

- Service Endpoints + Service Endpoint Policies (to explicitly allow only approved services)
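The firewall bypass described above relies on longest-prefix matching: a more specific route for a storage prefix wins over the default route that points at the firewall. The following is a minimal sketch of that selection logic only, not Azure's full route evaluation. The route table, the next hop labels, and the address prefixes (RFC 5737 documentation ranges) are hypothetical.

```python
import ipaddress

# Hypothetical route table: (address prefix, next hop type).
# The prefixes are RFC 5737 documentation ranges, not real Azure ranges.
ROUTES = [
    ("0.0.0.0/0", "VirtualAppliance"),  # default route: everything goes to the firewall
    ("203.0.113.0/24", "Internet"),     # more specific route: this prefix bypasses the firewall
]


def next_hop(destination_ip, routes=ROUTES):
    """Return the next hop type of the most specific route containing the IP."""
    ip = ipaddress.ip_address(destination_ip)
    matches = []
    for prefix, hop in routes:
        network = ipaddress.ip_network(prefix)
        if ip in network:
            matches.append((network.prefixlen, hop))
    if not matches:
        return None
    # The longest prefix (largest prefix length) wins.
    return max(matches, key=lambda match: match[0])[1]


print(next_hop("203.0.113.25"))  # destination covered by the specific route
print(next_hop("198.51.100.7"))  # destination covered only by the default route
```

Any destination that no specific route covers falls through to the default route and therefore to the firewall, which is how traffic assumed to be minimal can still end up being billed.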

I assumed that control traffic would be minimal. That assumption proved costly.

Discovery: Anomalies in Azure Firewall Usage

In the client’s production environment, Azure Firewall data processing suddenly spiked.

- Over 100 TB of traffic was logged in a single month.

- Cost: more than €3,000 for firewall data processing alone.

I suspected:

- Misconfigured routing to Lakehouse storage via Firewall.

- Possibly unmonitored flows from Databricks clusters.

However, our routing was correct. So I recreated the setup in my own subscription:

Reproduction & Testing

- Subscription: My own (East US 2 region with lower pricing)

- Setup:

- Databricks Workspace (VNet-injected, no public IP)

- Azure Firewall

- Flow logs + NSG diagnostics + Traffic Analytics

- Single-node cluster (smallest size possible)

Result:

- My Databricks VM downloaded ~12.2 GiB at startup

- This download passed through the Azure Firewall

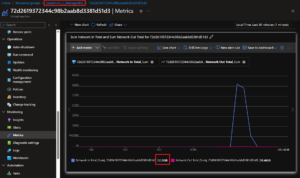

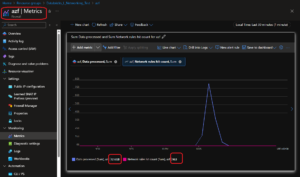

Screenshots confirmed:

- Multiple rule hits confirming firewall inspection

- Matching throughput & rule activity over time

- This confirms the root cause of what I experienced on the customer’s production platform.

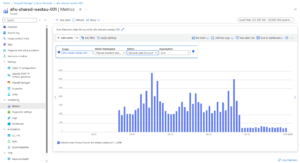

- Azure Firewall Network Rules Hit Count:

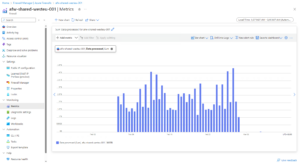

- Azure Firewall Data Processed:

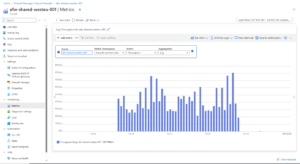

- Azure Firewall Throughput (which, incidentally, appears to be about 1 Gbps):

- Cost analysis confirming €3,000+ for this traffic type:

Root Cause

Databricks startup scripts fetch a significant set of packages, libraries, and configuration components from secure internal endpoints, even before the cluster runs any user-defined job.

These interactions include:

- Installation tools

- Environment bootstrapping

- Logging and telemetry components

When routed through the Azure Firewall, every byte of these downloads is billed as firewall data processing.
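To get a feel for how quickly this adds up, here is a rough estimator. It is a sketch under stated assumptions: the 12.2 GiB per node comes from the reproduction in this article, while the cluster size, the number of starts, and the price per GiB are hypothetical placeholders that should be replaced with your own figures and the current Azure Firewall pricing.

```python
STARTUP_GIB_PER_NODE = 12.2  # measured in the reproduction described in this article


def monthly_startup_gib(nodes_per_cluster, cluster_starts_per_month,
                        gib_per_node=STARTUP_GIB_PER_NODE):
    """Total startup download volume per month, in GiB."""
    return nodes_per_cluster * cluster_starts_per_month * gib_per_node


def firewall_processing_cost(total_gib, price_per_gib):
    """Data processing charge for a given volume at a given per-GiB rate."""
    return total_gib * price_per_gib


def node_starts_for_volume(total_tib, gib_per_node=STARTUP_GIB_PER_NODE):
    """How many node startups are needed to generate a given volume in TiB."""
    return total_tib * 1024 / gib_per_node


# Hypothetical workload: 10-node clusters started 300 times per month.
volume = monthly_startup_gib(nodes_per_cluster=10, cluster_starts_per_month=300)

# Hypothetical rate; look up the real price for your region and firewall SKU.
cost = firewall_processing_cost(volume, price_per_gib=0.02)

print(f"Startup volume: {volume:,.0f} GiB per month")
print(f"Firewall data processing: {cost:,.2f} per month (currency of the rate)")
print(f"Node starts needed for 100 TiB: {node_starts_for_volume(100):,.0f}")
```

The last function runs the calculation in reverse: it shows how many node startups it takes to reach a volume like the one seen on the customer's firewall, which helps judge whether job clusters and autoscaling events alone could plausibly explain a spike.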

Deep-Dive: Traffic Analytics and Destination IPs

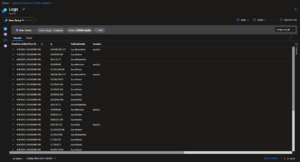

Using Azure Traffic Analytics, I was able to observe and confirm which public IPs were contacted by the VM during cluster startup.

Top Talking Pair:

I also extracted the list of all contacted IPs from NSG Flow Logs and Log Analytics:

CSV with full IP list: Databricks talked to these IPs

Top Contacted Azure Services

| Service | Count |
|---|---|
| AzureCloud | 22 |
| AzureDatabricks | 5 |
| AzureEventHub | 3 |
| AzureMonitor | 2 |
| AzureSQL | 2 |

Top IPs Contacted

| IP Address | Count |
|---|---|
| 104.208.181.177 | 3 |
| 20.41.4.115 | 3 |
| 52.239.229.100 | 3 |
| 20.49.99.69 | 2 |
| 20.209.68.161 | 2 |
| 20.209.69.193 | 2 |
| 20.209.69.196 | 2 |
| 20.209.69.161 | 2 |
| 20.60.237.161 | 2 |
| 20.62.58.132 | 2 |

All traffic confirmed as eastus2
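The tables above count how often each destination was contacted. For cost analysis it is often more useful to rank destinations by bytes transferred. Below is a minimal sketch of how that could be done from raw flow tuples. It assumes the comma-separated NSG flow log version 2 tuple layout (timestamp, source IP, destination IP, source port, destination port, protocol, direction, decision, flow state, then packet and byte counters for each direction), so verify the field order against the current documentation. The sample tuples are synthetic and use documentation IP ranges; they are not data from this test.

```python
from collections import Counter


def bytes_by_destination(flow_tuples):
    """Sum bytes in both directions per destination IP, outbound flows only."""
    totals = Counter()
    for line in flow_tuples:
        fields = line.strip().split(",")
        if len(fields) < 13:
            continue  # not a version 2 tuple with byte counters
        dst_ip, direction = fields[2], fields[6]
        if direction != "O":
            continue  # keep only flows initiated by the VM
        sent = int(fields[10] or 0)      # bytes source -> destination
        received = int(fields[12] or 0)  # bytes destination -> source
        totals[dst_ip] += sent + received
    return totals


# Synthetic sample tuples (assumed layout, made-up values).
sample = [
    "1700000000,10.0.0.4,203.0.113.10,50000,443,T,O,A,B,,,,",
    "1700000060,10.0.0.4,203.0.113.10,50000,443,T,O,A,E,120,9000,800,1200000",
    "1700000060,10.0.0.4,198.51.100.20,50001,443,T,O,A,E,10,700,12,5000",
    "1700000060,198.51.100.99,10.0.0.4,40000,22,T,I,D,B,,,,",
]

for ip, total in bytes_by_destination(sample).most_common():
    print(ip, total)
```

Ranking by bytes rather than by hit count makes it easier to spot the few storage endpoints responsible for most of the startup download.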

NSG and VNet logs confirm full diagnostics coverage:

Lessons Learned & Recommendations

- Don’t underestimate control traffic. Every VM repeats the same startup download, so the total volume scales with cluster size.

- Private Endpoints are your friend, for both control plane and storage. But they also generate cost and must be planned carefully. When using DBFS, a Private Endpoint is strongly recommended, especially as it’s the only secure option in most architectures where full network isolation is required. For Lakehouse access, however, it’s worth evaluating whether a Service Endpoint may be sufficient, because as long as the traffic stays within the same region as the Databricks VMs, no data transfer charges will be incurred.

- Use Service Endpoints + Endpoint Policies to restrict access paths.

- Validate with NSG Flow Logs and Traffic Analytics.

- Test cluster start behavior in isolated environments before rolling to production.

- Pay attention to region – East US 2 was used in this test for lower data egress pricing.

Conclusion

This situation was an expensive lesson in assuming network silence where there was heavy hidden traffic. Infrastructure decisions made with the best intentions (security, compliance, monitoring) can cause significant financial surprises if not backed with experimentation and detailed monitoring.

If you’re planning a secure Databricks deployment with restricted egress, include these startup flows in your cost estimations. Better to spend time up front than spend thousands later.

Hi Michał,

Would using a Service Endpoint actually eliminate this hidden cost? I.e., a Service Endpoint should bypass Azure Firewall.

Thanks,

Mark

Hi Mark. In short: yes 🙂

Service Endpoint routes override any BGP/UDR entries; see this link (and the note box inside it):

https://learn.microsoft.com/en-us/azure/virtual-network/virtual-network-service-endpoints-overview#logging-and-troubleshooting

This is also why there is a policy to audit/prevent the creation of Service Endpoints. See the description: https://www.azadvertizer.net/azpolicyadvertizer/Deny-Service-Endpoints.html

As for cost: as long as the traffic is not inter-region, it stays free:

https://techcommunity.microsoft.com/blog/coreinfrastructureandsecurityblog/service-endpoints-vs-private-endpoints/3962134