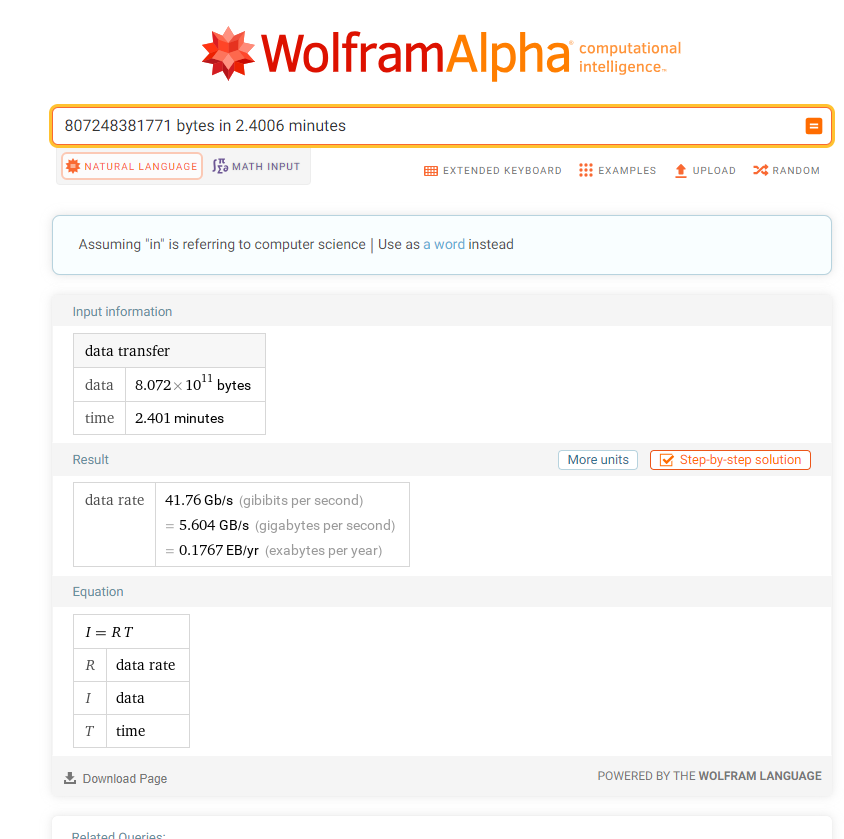

My recommendation: azcopy! And no, you don’t need a machine with fast internet to mediate the transfer. The service transfers data over an internal backbone using the storage-to-storage API. My throughput was about 5.6 GB per second (yes, gigabytes, not gigabits)!

Of course, there are a few key prerequisites:

- If you use OAuth (an Azure AD/Entra ID account) to access both storage accounts, they must be in the same Azure tenant.

- Network access must permit the copy: either public access is enabled, or "Allow Azure services on the trusted services list to access this storage account" is checked.

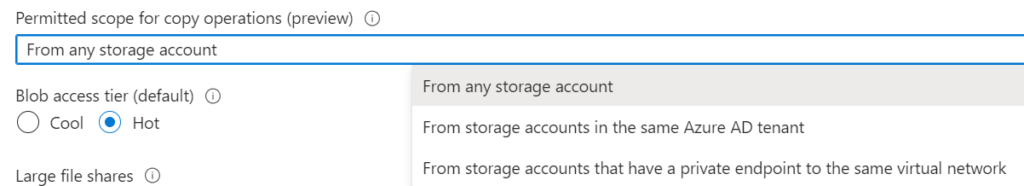

- The permitted scope for copy operations must allow communication between the two accounts.

The network access setting is quite well-known, while the permitted scope setting appeared quite recently.
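To check these prerequisites from a terminal rather than the portal, something like the following may help. It is only a sketch: it assumes the Azure CLI (`az`) is installed and logged in, the helper name `show_copy_prereqs` is made up for illustration, and the property names in the query (`publicNetworkAccess`, `networkRuleSet`, `allowedCopyScope`) are my assumption about what `az storage account show` returns, so verify them against your own output.

```shell
# Hypothetical helper: print the settings that matter for a storage-to-storage copy.
# Nothing runs until the function is called.
show_copy_prereqs() {
  account="$1"          # storage account name (placeholder)
  resource_group="$2"   # resource group name (placeholder)
  # Property names in --query are assumptions; compare with the full JSON output.
  az storage account show \
    --name "$account" \
    --resource-group "$resource_group" \
    --query "{publicNetworkAccess: publicNetworkAccess, defaultAction: networkRuleSet.defaultAction, bypass: networkRuleSet.bypass, allowedCopyScope: allowedCopyScope}" \
    --output json
}

# Usage (placeholder names):
#   show_copy_prereqs mydeststorage my-resource-group
```

Run it for both the source and the destination account and compare the values with the portal screenshot above.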

So, how to make it work?

- Follow all the instructions on how to log in to both of your storage accounts: Get started with AzCopy

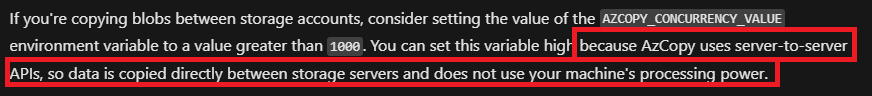

- Set the AZCOPY_CONCURRENCY_VALUE environment variable to something bigger than 1000; details: Increase concurrency

- Run the azcopy copy command or azcopy sync between two storage accounts. Microsoft’s backbone connection will be used automatically.
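The last two steps can be wrapped in a small shell function. This is a minimal sketch, not an official recipe: the function name `s2s_copy` and the default of 2000 are my own choices, it uses `azcopy copy` (swap in `azcopy sync` if you only want to transfer differences), and it assumes you have already logged in with `azcopy login`.

```shell
# Hypothetical wrapper; nothing runs until the function is called.
s2s_copy() {
  src_url="$1"   # e.g. https://<source-account>.blob.core.windows.net/<container>
  dst_url="$2"   # e.g. https://<dest-account>.blob.core.windows.net/<container>
  # Raise the number of concurrent requests; third argument is optional.
  export AZCOPY_CONCURRENCY_VALUE="${3:-2000}"
  # Both URLs point at storage accounts, so the data is copied between the
  # services and does not flow through this machine.
  azcopy copy "$src_url" "$dst_url" --recursive=true
}

# Usage (placeholder URLs):
#   s2s_copy "https://mysourcestorage.blob.core.windows.net/src" \
#            "https://mydeststorage.blob.core.windows.net/dst"
```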

Why is it so fast?

In the Increase concurrency doc page you can find this information:

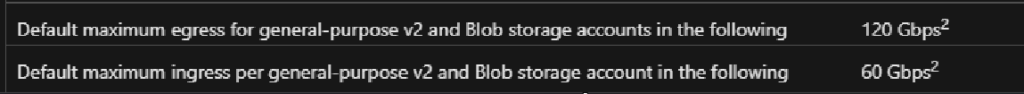

And the throughput of regular storage accounts in West Europe is provided here: Scale targets for standard storage accounts

How did it look in my case?

- I logged in using the ‘login’ command and an external tenant (B2B account), from my laptop, on Ubuntu running on Windows (WSL2):

  `azcopy login --tenant-id "external_tenant.onmicrosoft.com"`

- Checked if my connection works, just by listing the content:

  `azcopy list https://mysourcestorage.blob.core.windows.net/src`

  `azcopy list https://mydeststorage.blob.core.windows.net/dst`

- Then set the concurrency value and ran the copy command:

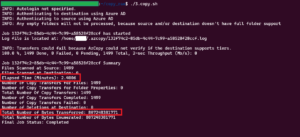

  `export AZCOPY_CONCURRENCY_VALUE=2000`

  `azcopy sync "https://mysourcestorage.blob.core.windows.net/src" "https://mydeststorage.blob.core.windows.net/dst" --recursive=true`

- The result? Over 807 GB of data was copied in less than three minutes 🙂

And the calculation to determine the speed:
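As a rough cross-check using only the figures quoted in the text (807 GB, "less than three minutes", about 5.6 GB per second), the arithmetic can be sketched in plain shell. The exact elapsed time is not stated in the text, so this only derives a lower bound on the speed and the duration implied by the quoted rate; decimal units (1 GB = 1000 MB) are assumed.

```shell
# Figures quoted in the post; the exact elapsed time is not given in the text.
total_gb=807          # data copied, in GB
max_seconds=180       # "less than three minutes"
quoted_mb_per_s=5600  # "about 5.6 GB per second", expressed in MB/s

# Lower bound on throughput if the copy had taken the full three minutes
# (integer division, so the result is truncated).
min_mb_per_s=$(( total_gb * 1000 / max_seconds ))

# Elapsed time implied by the quoted throughput.
implied_seconds=$(( total_gb * 1000 / quoted_mb_per_s ))

echo "at least ${min_mb_per_s} MB/s; about ${implied_seconds} s at the quoted rate"
# prints: at least 4483 MB/s; about 144 s at the quoted rate
```

So the quoted 5.6 GB per second corresponds to roughly 2 minutes 24 seconds of copying, which is consistent with "less than three minutes".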